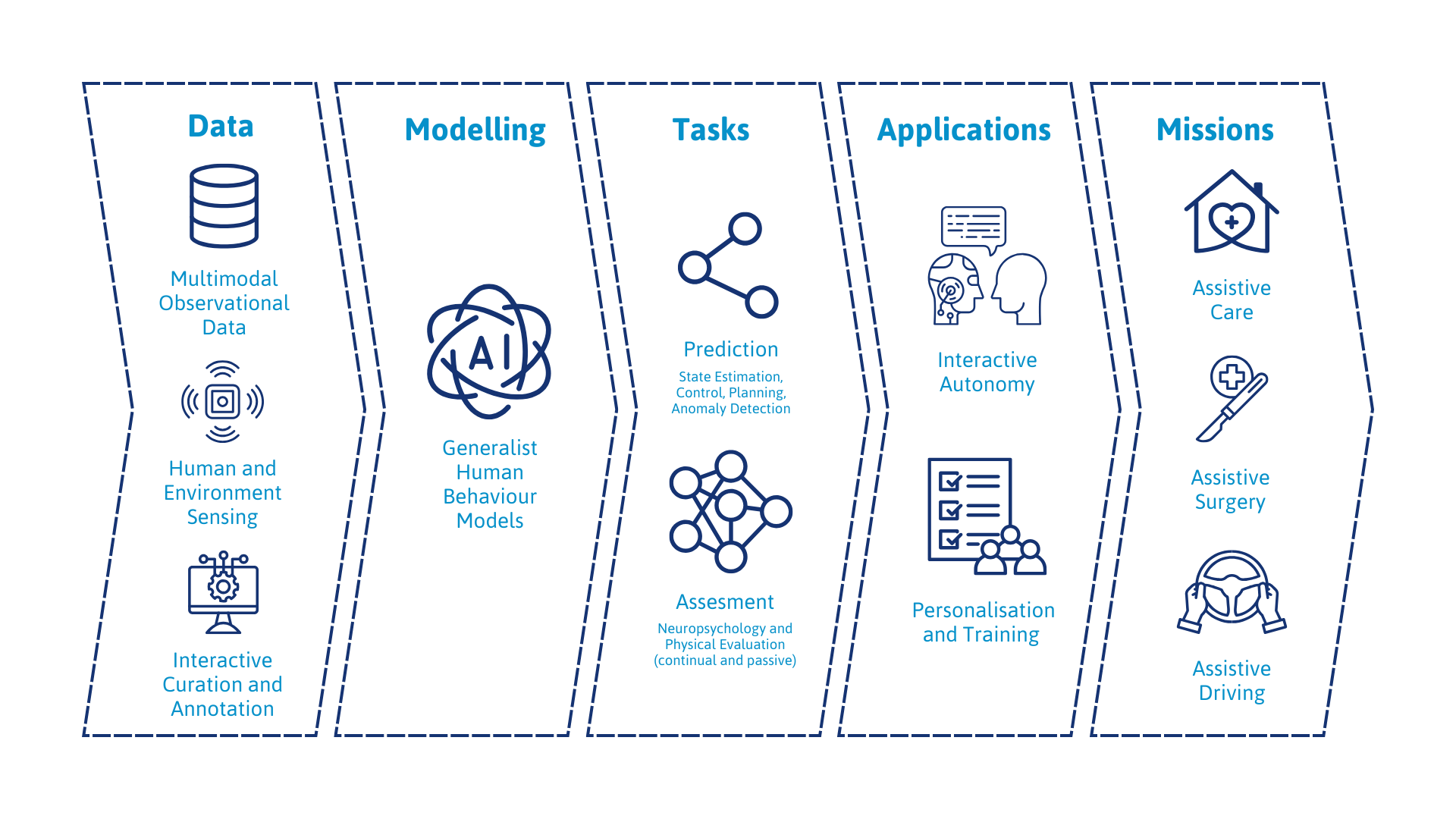

Our research advances the fundamental mechanisms of learning, adaptation, and control that drive autonomous behaviour. We develop robotic systems capable of learning from human instruction, engaging in embodied dialogue, and collaborating seamlessly with human partners. As these systems become more capable, we remain committed to responsible development — embedding safety, trust, and transparency.

We combine fundamental research in AI and cognitive science with the engineering of complete, deployable systems that address real-world challenges in healthcare and mobility. This includes long-standing research interests in:

- Generative modelling of human behaviour

- Interactive compositional learning and machine teaching

- Cognitive-aware assessment and training

- Human-robot collaboration

- Safe and trustworthy shared autonomy frameworks

Together, these capabilities enable the creation of intelligent systems that are not only autonomous, but also genuinely interactive, interpretable, and aligned with human needs.

Featured Publications

Publications

2025

Heuristic Adaptation of Potentially Misspecified Domain Support for Likelihood-Free Inference in Stochastic Dynamical Systems Working paper

Georgios Kamaras, Craig Innes, Subramanian Ramamoorthy

2025.

@workingpaper{Kamaras2025,

title = {Heuristic Adaptation of Potentially Misspecified Domain Support for Likelihood-Free Inference in Stochastic Dynamical Systems},

author = {Georgios Kamaras and Craig Innes and Subramanian Ramamoorthy },

url = {https://arxiv.org/abs/2510.26656},

year = {2025},

date = {2025-10-30},

abstract = {In robotics, likelihood-free inference (LFI) can provide the domain distribution that adapts a learnt agent in a parametric set of deployment conditions. LFI assumes an arbitrary support for sampling, which remains constant as the initial generic prior is iteratively refined to more descriptive posteriors. However, a potentially misspecified support can lead to suboptimal, yet falsely certain, posteriors. To address this issue, we propose three heuristic LFI variants: EDGE, MODE, and CENTRE. Each interprets the posterior mode shift over inference steps in its own way and, when integrated into an LFI step, adapts the support alongside posterior inference. We first expose the support misspecification issue and evaluate our heuristics using stochastic dynamical benchmarks. We then evaluate the impact of heuristic support adaptation on parameter inference and policy learning for a dynamic deformable linear object (DLO) manipulation task. Inference results in a finer length and stiffness classification for a parametric set of DLOs. When the resulting posteriors are used as domain distributions for sim-based policy learning, they lead to more robust object-centric agent performance.

},

keywords = {},

pubstate = {published},

tppubtype = {workingpaper}

}

Conversational Code Generation: a Case Study of Designing a Dialogue System for Generating Driving Scenarios for Testing Autonomous Vehicles Proceedings Article

Rimvydas Rubavicius, Antonio Valerio Miceli-Barone, Alex Lascarides, Subramanian Ramamoorthy

In: Proceedings of the Generative Code Intelligence Workshop (GeCoIn 2025) co-located with 28th European Conference on Artificial Intelligence (ECAI 2025) , CEUR Workshop Proceedings, 2025.

@inproceedings{rubavicius2025conversationalcodegenerationcase,

title = {Conversational Code Generation: a Case Study of Designing a Dialogue System for Generating Driving Scenarios for Testing Autonomous Vehicles},

author = {Rimvydas Rubavicius and Antonio Valerio Miceli-Barone and Alex Lascarides and Subramanian Ramamoorthy},

url = {https://ceur-ws.org/Vol-4075/paper7.pdf

https://arxiv.org/abs/2410.09829},

year = {2025},

date = {2025-10-26},

urldate = {2025-09-03},

booktitle = {Proceedings of the Generative Code Intelligence Workshop (GeCoIn 2025) co-located with 28th European Conference on Artificial Intelligence (ECAI 2025)

},

volume = {4075},

publisher = {CEUR Workshop Proceedings},

abstract = {Cyber-physical systems like autonomous vehicles are tested in simulation before deployment, using domain-specific programs for scenario specification. To aid the testing of autonomous vehicles in simulation, we design a natural language interface, using an instruction-following large language model, to assist a non-coding domain expert in synthesising the desired scenarios and vehicle behaviours. We show that using it to convert utterances to the symbolic program is feasible, despite the very small training dataset. Human experiments show that dialogue is critical to successful simulation generation, leading to a 4.5 times higher success rate than a generation without engaging in extended conversation.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

ROOM: A Physics-Based Continuum Robot Simulator for Photorealistic Medical Datasets Generation Working paper

Salvatore Esposito, Matías Mattamala, Daniel Rebain, Francis Xiatian Zhang, Kevin Dhaliwal, Mohsen Khadem, Subramanian Ramamoorthy

2025.

@workingpaper{Espositoetal2025,

title = {ROOM: A Physics-Based Continuum Robot Simulator for Photorealistic Medical Datasets Generation},

author = {Salvatore Esposito and Matías Mattamala and Daniel Rebain and Francis Xiatian Zhang and Kevin Dhaliwal and Mohsen Khadem and Subramanian Ramamoorthy },

url = {https://arxiv.org/abs/2509.13177

https://github.com/iamsalvatore/room},

year = {2025},

date = {2025-09-17},

abstract = {Continuum robots are advancing bronchoscopy procedures by accessing complex lung airways and enabling targeted interventions. However, their development is limited by the lack of realistic training and test environments: Real data is difficult to collect due to ethical constraints and patient safety concerns, and developing autonomy algorithms requires realistic imaging and physical feedback. We present ROOM (Realistic Optical Observation in Medicine), a comprehensive simulation framework designed for generating photorealistic bronchoscopy training data. By leveraging patient CT scans, our pipeline renders multi-modal sensor data including RGB images with realistic noise and light specularities, metric depth maps, surface normals, optical flow and point clouds at medically relevant scales. We validate the data generated by ROOM in two canonical tasks for medical robotics -- multi-view pose estimation and monocular depth estimation, demonstrating diverse challenges that state-of-the-art methods must overcome to transfer to these medical settings. Furthermore, we show that the data produced by ROOM can be used to fine-tune existing depth estimation models to overcome these challenges, also enabling other downstream applications such as navigation. We expect that ROOM will enable large-scale data generation across diverse patient anatomies and procedural scenarios that are challenging to capture in clinical settings.},

keywords = {},

pubstate = {published},

tppubtype = {workingpaper}

}

The ACPGBI AI taskforce report: A mixed-methods roadmap for AI in colorectal surgery Journal Article

James M. Kinross, Kyle Lam, Andrew Yiu, Katie Adams, Kiran Altaf, Elaine Burns, Mindy Duffourc, Nicola Eardley, Charles Evans, Stamatia Giannarou, Laura Hancock, Victoria Hu, Ahsan Javed, Shivank Khare, Evangelos Mazomenos, Linnet McGeever, Susan Moug, Piero Nastro, Sebastien Ourselin, Subramanian Ramamoorthy, Campbell Roxburgh, Catherine Simister, Danail Stoyanov, Gregory Thomas, Pietro Valdastri, Marcus Vass, Dale Vimalachandran, Tom Vercauteren, Justin Davies

In: Colorectal Disease, vol. 27, no. 9, 2025.

@article{Kinrossetal2025,

title = {The ACPGBI AI taskforce report: A mixed-methods roadmap for AI in colorectal surgery},

author = {James M. Kinross and Kyle Lam and Andrew Yiu and Katie Adams and Kiran Altaf and Elaine Burns and Mindy Duffourc and Nicola Eardley and Charles Evans and Stamatia Giannarou and Laura Hancock and Victoria Hu and Ahsan Javed and Shivank Khare and Evangelos Mazomenos and Linnet McGeever and Susan Moug and Piero Nastro and Sebastien Ourselin and Subramanian Ramamoorthy and Campbell Roxburgh and Catherine Simister and Danail Stoyanov and Gregory Thomas and Pietro Valdastri and Marcus Vass and Dale Vimalachandran and Tom Vercauteren and Justin Davies},

doi = {10.1111/codi.70232},

year = {2025},

date = {2025-09-16},

urldate = {2025-09-16},

journal = {Colorectal Disease},

volume = {27},

number = {9},

abstract = {Abstract Aim The ACPGBI has commissioned a taskforce to devise a strategy for integrating artificial intelligence (AI) into colorectal surgery. This report aims to (i) map current AI adoption amongst UK colorectal surgeons; (ii) evaluate knowledge, attitudes, perceptions and experience of AI technologies; and (iii) establish priority recommendations to drive innovation across the specialty. Methods A prospective 45-item questionnaire was circulated to the ACPGBI membership. Questionnaire findings were explored at a multidisciplinary round table of surgeons, allied professionals, computer scientists and lawyers. Strategic recommendations were then generated. Results 122 members responded (75.4% consultants; 72.1% male; modal age 41–50 years). Although 43.5% used AI daily, only one third said they could explain key concepts within AI. 86.9% anticipated routine future-AI use, with documentation and imaging ranked highest. 88.5% endorsed formal AI training. Major obstacles were unclear regulation, cost, medicolegal liability and professional or patient distrust. The round table generated 17 recommendations across clinical, educational and research domains and a ten-point action plan, including the establishment of a Colorectal AI Committee and the creation of an open-source colorectal foundational data initiative. Conclusion This taskforce report combines questionnaire insights from the ACPGBI membership and expert debate into 17 key recommendations and a ten-point action plan that will set the direction of future colorectal AI practice. The objective is to establish a framework through which colorectal surgical practice can be augmented by safe, trustworthy AI.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Learning a Neural Association Network for Self-supervised Multi-Object Tracking Proceedings Article

Shuai Li, Michael Burke, Subramanian Ramamoorthy, Juergen Gall

In: 36th British Machine Vision Conference, (BMVC) 24th - 27th November 2025, Sheffield, UK, BMVA Press, 2025.

@inproceedings{li2024learningneuralassociationnetwork,

title = {Learning a Neural Association Network for Self-supervised Multi-Object Tracking},

author = {Shuai Li and Michael Burke and Subramanian Ramamoorthy and Juergen Gall},

url = {https://arxiv.org/abs/2411.11514},

year = {2025},

date = {2025-09-03},

urldate = {2024-01-01},

booktitle = {36th British Machine Vision Conference, (BMVC) 24th - 27th November 2025, Sheffield, UK},

publisher = {BMVA Press},

abstract = {This paper introduces a novel framework to learn data association for multi-object tracking in a self-supervised manner. Fully-supervised learning methods are known to achieve excellent tracking performances, but acquiring identity-level annotations is tedious and time-consuming. Motivated by the fact that in real-world scenarios object motion can be usually represented by a Markov process, we present a novel expectation maximization (EM) algorithm that trains a neural network to associate detections for tracking, without requiring prior knowledge of their temporal correspondences. At the core of our method lies a neural Kalman filter, with an observation model conditioned on associations of detections parameterized by a neural network. Given a batch of frames as input, data associations between detections from adjacent frames are predicted by a neural network followed by a Sinkhorn normalization that determines the assignment probabilities of detections to states. Kalman smoothing is then used to obtain the marginal probability of observations given the inferred states, producing a training objective to maximize this marginal probability using gradient descent. The proposed framework is fully differentiable, allowing the underlying neural model to be trained end-to-end. We evaluate our approach on the challenging MOT17, MOT20, and BDD100K datasets and achieve state-of-the-art results in comparison to self-supervised trackers using public detections.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Beyond Discriminant Patterns: On the Robustness of Decision Rule Ensembles Proceedings Article

Xin Du, Subramanian Ramamoorthy, Wouter Duivesteijn, Jin Tian, Mykola Pechenizkiy

In: IEEE International Conference on Data Mining (ICDM), 2025.

@inproceedings{2109.10432,

title = {Beyond Discriminant Patterns: On the Robustness of Decision Rule Ensembles},

author = {Xin Du and Subramanian Ramamoorthy and Wouter Duivesteijn and Jin Tian and Mykola Pechenizkiy},

url = {https://arxiv.org/abs/2109.10432},

year = {2025},

date = {2025-08-26},

urldate = {2021-09-21},

booktitle = { IEEE International Conference on Data Mining (ICDM)},

abstract = {Local decision rules are commonly understood to be more explainable, due to the local nature of the patterns involved. With numerical optimization methods such as gradient boosting, ensembles of local decision rules can gain good predictive performance on data involving global structure. Meanwhile, machine learning models are being increasingly used to solve problems in high-stake domains including healthcare and finance. Here, there is an emerging consensus regarding the need for practitioners to understand whether and how those models could perform robustly in the deployment environments, in the presence of distributional shifts. Past research on local decision rules has focused mainly on maximizing discriminant patterns, without due consideration of robustness against distributional shifts. In order to fill this gap, we propose a new method to learn and ensemble local decision rules, that are robust both in the training and deployment environments. Specifically, we propose to leverage causal knowledge by regarding the distributional shifts in subpopulations and deployment environments as the results of interventions on the underlying system. We propose two regularization terms based on causal knowledge to search for optimal and stable rules. Experiments on both synthetic and benchmark datasets show that our method is effective and robust against distributional shifts in multiple environments.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Evaluating personalized beneficial interventions in the daily lives of older adults using a camera Proceedings Article

Longfei Chen, Robert B. Fisher, Nusa Faric, Jacques Fleuriot, Subramanian Ramamoorthy

In: Daniele Cafolla, Timothy Rittman, Hao Ni (Ed.): Artificial Intelligence in Healthcare (AIiH), pp. 131-141, Springer Nature, 2025, ISBN: 978-3-032-00656-1.

@inproceedings{chen2025evaluating,

title = {Evaluating personalized beneficial interventions in the daily lives of older adults using a camera},

author = {Longfei Chen and Robert B. Fisher and Nusa Faric and Jacques Fleuriot and Subramanian Ramamoorthy },

editor = {Daniele Cafolla and Timothy Rittman and Hao Ni},

url = {https://link.springer.com/chapter/10.1007/978-3-032-00656-1_10

https://www.arxiv.org/abs/2507.19494},

doi = {10.1007/978-3-032-00656-1_10},

isbn = {978-3-032-00656-1},

year = {2025},

date = {2025-08-20},

urldate = {2025-08-20},

booktitle = {Artificial Intelligence in Healthcare (AIiH)},

volume = {16039},

pages = {131-141},

publisher = {Springer Nature},

series = {Lecture Notes in Computer Science},

abstract = {Beneficial daily activity interventions have been shown to improve both the physical and mental health of older adults. However, there is a lack of robust objective metrics and personalised strategies to measure their impact. In this study, two older adults aged over 65, living in Edinburgh, UK, selected their preferred daily interventions (mindful meals and art crafts), which were then assessed for effectiveness. The total monitoring period across both participants was 8 weeks. Their physical behaviours were continuously monitored using a non-contact, privacy-preserving camera-based system. Postural and mobility statistics were extracted using computer vision algorithms and compared across periods with and without the interventions. The results demonstrate significant behavioural changes for both participants, highlighting the effectiveness of both these activities and the monitoring system.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Learning Neuro-symbolic Dialogue Strategies for Interactive Symbol Grounding Journal Article

Rimvydas Rubavicius, Alex Lascarides, Subramanian Ramamoorthy

In: Linguistic Issues in Language Technology, vol. 20, iss. 1, 2025.

@article{rubavicius-etal-2025-strategies,

title = {Learning Neuro-symbolic Dialogue Strategies for Interactive Symbol Grounding},

author = {Rimvydas Rubavicius and Alex Lascarides and Subramanian Ramamoorthy },

url = {https://journals.colorado.edu/index.php/lilt/article/view/2457

https://github.com/assistive-autonomy/dialogue-strategies},

doi = {10.33011/lilt.v20.a1},

year = {2025},

date = {2025-08-06},

urldate = {2025-08-06},

journal = {Linguistic Issues in Language Technology},

volume = {20},

issue = {1},

abstract = {Interactive task learning studies situations in which a teacher (task instructor) interacts with a learner (task executor) to perform a novel task in an embodied environment. To successfully interpret the teacher's utterances, the learner has to perform interactive symbol grounding: it must update its prior beliefs about the mapping from symbols to visual referents each time the teacher speaks. Interactive symbol grounding is even more challenging if the learner starts out unaware of concepts that are critical to task success. In that case, the learner must use the embodied conversation to discover and adapt to unforeseen possibilities, and so must cope with a continuously expanding hypothesis space and hence a non-stationary domain model, requiring structure-level updates during interaction. In this paper, we propose a neuro-symbolic model for learning dialogue strategies for achieving interactive symbol grounding. In particular, we study the effects of enriching the model with symbolic reasoning that captures the valid consequences of quantifiers (e.g., both, every). Our hypothesis is that utilizing such reasoning makes interactive task learning more data efficient. We test this empirically via a task of interactive reference resolution, in which the learner must jointly learn a grounding model and a policy for querying the teacher to enhance its accuracy in grounding. Our results show that a learner that exploits such symbolic reasoning for both decision-making and grounding is more data-efficient than learners that ignore such linguistic insights.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

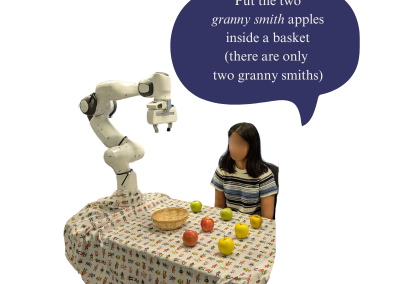

SECURE: Semantics-aware Embodied Conversation under Unawareness for Lifelong Robot Learning Proceedings Article

Rimvydas Rubavicius, Peter David Fagan, Alex Lascarides, Subramanian Ramamoorthy

In: Proceedings of The 4th Conference on Lifelong Learning Agents, PMLR, 2025.

@inproceedings{rubavicius2025securesemanticsawareembodiedconversation,

title = {SECURE: Semantics-aware Embodied Conversation under Unawareness for Lifelong Robot Learning},

author = {Rimvydas Rubavicius and Peter David Fagan and Alex Lascarides and Subramanian Ramamoorthy},

url = {https://arxiv.org/abs/2409.17755},

year = {2025},

date = {2025-08-01},

urldate = {2025-08-01},

booktitle = {Proceedings of The 4th Conference on Lifelong Learning Agents, PMLR},

abstract = {This paper addresses a challenging interactive task learning scenario we call rearrangement under unawareness: an agent must manipulate a rigid-body environment without knowing a key concept necessary for solving the task and must learn about it during deployment. For example, the user may ask to "put the two granny smith apples inside the basket", but the agent cannot correctly identify which objects in the environment are "granny smith" as the agent has not been exposed to such a concept before. We introduce SECURE, an interactive task learning policy designed to tackle such scenarios. The unique feature of SECURE is its ability to enable agents to engage in semantic analysis when processing embodied conversations and making decisions. Through embodied conversation, a SECURE agent adjusts its deficient domain model by engaging in dialogue to identify and learn about previously unforeseen possibilities. The SECURE agent learns from the user's embodied corrective feedback when mistakes are made and strategically engages in dialogue to uncover useful information about novel concepts relevant to the task. These capabilities enable the SECURE agent to generalize to new tasks with the acquired knowledge. We demonstrate in the simulated Blocksworld and the real-world apple manipulation environments that the SECURE agent, which solves such rearrangements under unawareness, is more data-efficient than agents that do not engage in embodied conversation or semantic analysis.},

howpublished = {Proceedings of The 4th Conference on Lifelong Learning Agents, PMLR},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Assistax: A Hardware-Accelerated Reinforcement Learning Benchmark for Assistive Robotics Working paper

Leonard Hinckeldey, Elliot Fosong, Elle Miller, Rimvydas Rubavicius, Trevor McInroe, Patricia Wollstadt, Christiane B. Wiebel-Herboth, Subramanian Ramamoorthy, Stefano V. Albrecht

2025.

@workingpaper{hinckeldey2025assistax,

title = {Assistax: A Hardware-Accelerated Reinforcement Learning Benchmark for Assistive Robotics},

author = {Leonard Hinckeldey and Elliot Fosong and Elle Miller and Rimvydas Rubavicius and Trevor McInroe and Patricia Wollstadt and Christiane B. Wiebel-Herboth and Subramanian Ramamoorthy and Stefano V. Albrecht},

url = {https://arxiv.org/abs/2507.21638},

year = {2025},

date = {2025-07-29},

urldate = {2025-01-01},

booktitle = {Proc. Coordination and Cooperation in Multi-Agent Reinforcement Learning Workshop (CoCoMARL), RLC},

abstract = {The development of reinforcement learning (RL) algorithms has been largely driven by ambitious challenge tasks and benchmarks. Games have dominated RL benchmarks because they present relevant challenges, are inexpensive to run and easy to understand. While games such as Go and Atari have led to many breakthroughs, they often do not directly translate to real-world embodied applications. In recognising the need to diversify RL benchmarks and addressing complexities that arise in embodied interaction scenarios, we introduce Assistax: an open-source benchmark designed to address challenges arising in assistive robotics tasks. Assistax uses JAX’s hardware acceleration for significant speed-ups for learning in physics-based simulations. In terms of open-loop wall-clock time Assistax runs up to 370 faster, compared to CPU-based alternatives, when vectorising training runs. Assistax conceptualises the interaction between an assistive robot and an active human patient using multi-agent RL to train a population of diverse partner agents against which an embodied robotic agent's zero-shot coordination capabilities can be tested. Extensive evaluation and hyperparameter tuning for popular continuous control RL and MARL algorithms provide reliable baselines and establish Assistax as a practical benchmark for advancing RL research for assistive robotics.

},

keywords = {},

pubstate = {published},

tppubtype = {workingpaper}

}

Distributional Treatment of Real2Sim2Real for Object-Centric Agent Adaptation in Vision-Driven DLO Manipulation Journal Article

Georgios Kamaras, Subramanian Ramamoorthy

In: IEEE Robotics and Automation Letters (RA-L), vol. 10, no. 8, pp. 8075–8082, 2025, ISSN: 2377-3774.

@article{Kamaras_2025,

title = {Distributional Treatment of Real2Sim2Real for Object-Centric Agent Adaptation in Vision-Driven DLO Manipulation},

author = {Georgios Kamaras and Subramanian Ramamoorthy},

url = {https://ieeexplore.ieee.org/document/11045513},

doi = {10.1109/lra.2025.3581744},

issn = {2377-3774},

year = {2025},

date = {2025-07-20},

urldate = {2025-08-01},

journal = {IEEE Robotics and Automation Letters (RA-L)},

volume = {10},

number = {8},

pages = {8075–8082},

publisher = {Institute of Electrical and Electronics Engineers (IEEE)},

abstract = {We present an integrated (or end-to-end) framework for the Real2Sim2Real problem of manipulating deformable linear objects (DLOs) based on visual perception. Working with a parameterised set of DLOs, we use likelihood-free inference (LFI) to compute the posterior distributions for the physical parameters using which we can approximately simulate the behaviour of each specific DLO. We use these posteriors for domain randomisation while training, in simulation, object-specific visuomotor policies (i.e. assuming only visual and proprioceptive sensory) for a DLO reaching task, using model-free reinforcement learning. We demonstrate the utility of this approach by deploying sim-trained DLO manipulation policies in the real world in a zero-shot manner, i.e. without any further fine-tuning. In this context, we evaluate the capacity of a prominent LFI method to perform fine classification over the parametric set of DLOs, using only visual and proprioceptive data obtained in a dynamic manipulation trajectory. We then study the implications of the resulting domain distributions in sim-based policy learning and real-world performance.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

OPPH: A Vision-Based Operator for Measuring Body Movements for Personal Healthcare Proceedings Article

Chen Long-fei, Subramanian Ramamoorthy, Robert B Fisher

In: Computer Vision – ECCV 2024 Workshops. ECCV 2024. Lecture Notes in Computer Science, 2025.

@inproceedings{longfei2024opphvisionbasedoperatormeasuring,

title = {OPPH: A Vision-Based Operator for Measuring Body Movements for Personal Healthcare},

author = {Chen Long-fei and Subramanian Ramamoorthy and Robert B Fisher},

url = {https://link.springer.com/chapter/10.1007/978-3-031-92591-7_13

https://doi.org/10.1007/978-3-031-92591-7_13

https://arxiv.org/abs/2408.09409},

doi = {10.1007/978-3-031-92591-7_13},

year = {2025},

date = {2025-05-12},

urldate = {2025-05-12},

booktitle = {Computer Vision – ECCV 2024 Workshops. ECCV 2024. Lecture Notes in Computer Science},

volume = {15634},

abstract = {Vision-based motion estimation methods show promise in accurately and unobtrusively estimating human body motion for healthcare purposes. However, these methods are not specifically designed for healthcare purposes and face challenges in real-world applications. Human pose estimation methods often lack the accuracy needed for detecting fine-grained, subtle body movements, while optical flow-based methods struggle with poor lighting conditions and unseen real-world data. These issues result in human body motion estimation errors, particularly during critical medical situations where the body is motionless, such as during unconsciousness. To address these challenges and improve the accuracy of human body motion estimation for healthcare purposes, we propose the OPPH operator designed to enhance current vision-based motion estimation methods. This operator, which considers human body movement and noise properties, functions as a multi-stage filter. Results tested on two real-world and one synthetic human motion dataset demonstrate that the operator effectively removes real-world noise, significantly enhances the detection of motionless states, maintains the accuracy of estimating active body movements, and maintains long-term body movement trends. This method could be beneficial for analyzing both critical medical events and chronic medical conditions.},

howpublished = {In Proc. 12th International Workshop on Assistive Computer Vision and Robotics (ACVR), The European Conference on Computer Vision (ECCV), 2024},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Learning Visually Grounded Domain Ontologies via Embodied Conversation and Explanation Proceedings Article

Jonghyuk Park, Alex Lascarides, Subramanian Ramamoorthy

In: Proceedings of the AAAI Conference on Artificial Intelligence, pp. 14361-14368, 2025.

@inproceedings{park2024learningvisuallygroundeddomain,

title = {Learning Visually Grounded Domain Ontologies via Embodied Conversation and Explanation},

author = {Jonghyuk Park and Alex Lascarides and Subramanian Ramamoorthy},

url = {https://dl.acm.org/doi/10.1609/aaai.v39i13.33573

https://arxiv.org/abs/2412.09770},

doi = {10.1609/aaai.v39i13.33573},

year = {2025},

date = {2025-04-11},

urldate = {2025-04-11},

booktitle = {Proceedings of the AAAI Conference on Artificial Intelligence},

volume = {39},

number = {13},

pages = {14361-14368},

abstract = {In this paper, we offer a learning framework in which the agent's knowledge gaps are overcome through corrective feedback from a teacher whenever the agent explains its (incorrect) predictions. We test it in a low-resource visual processing scenario, in which the agent must learn to recognize distinct types of toy truck. The agent starts the learning process with no ontology about what types of trucks exist nor which parts they have, and a deficient model for recognizing those parts from visual input. The teacher's feedback to the agent's explanations addresses its lack of relevant knowledge in the ontology via a generic rule (e.g., "dump trucks have dumpers"), whereas an inaccurate part recognition is corrected by a deictic statement (e.g., "this is not a dumper"). The learner utilizes this feedback not only to improve its estimate of the hypothesis space of possible domain ontologies and probability distributions over them, but also to use those estimates to update its visual interpretation of the scene. Our experiments demonstrate that teacher-learner pairs utilizing explanations and corrections are more data-efficient than those without such a faculty.},

howpublished = {In Proc. AAAI Conference on Artificial Intelligence (AAAI-25), 2025},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

ContactFusion: Stochastic Poisson Surface Maps from Visual and Contact Sensing Working paper

Aditya Kamireddypalli, Joao Moura, Russell Buchanan, Sethu Vijayakumar, Subramanian Ramamoorthy

2025.

@workingpaper{kamireddypalli2025contactfusionstochasticpoissonsurface,

title = {ContactFusion: Stochastic Poisson Surface Maps from Visual and Contact Sensing},

author = {Aditya Kamireddypalli and Joao Moura and Russell Buchanan and Sethu Vijayakumar and Subramanian Ramamoorthy},

url = {https://arxiv.org/abs/2503.16592},

year = {2025},

date = {2025-01-01},

abstract = {Robust and precise robotic assembly entails insertion of constituent components. Insertion success is hindered when noise in scene understanding exceeds tolerance limits, especially when fabricated with tight tolerances. In this work, we propose ContactFusion which combines global mapping with local contact information, fusing point clouds with force sensing. Our method entails a Rejection Sampling based contact occupancy sensing procedure which estimates contact locations on the end-effector from Force/Torque sensing at the wrist. We demonstrate how to fuse contact with visual information into a Stochastic Poisson Surface Map (SPSMap) - a map representation that can be updated with the Stochastic Poisson Surface Reconstruction (SPSR) algorithm. We first validate the contact occupancy sensor in simulation and show its ability to detect the contact location on the robot from force sensing information. Then, we evaluate our method in a peg-in-hole task, demonstrating an improvement in the hole pose estimate with the fusion of the contact information with the SPSMap.},

keywords = {},

pubstate = {published},

tppubtype = {workingpaper}

}

2024

Click to Grasp: Zero-Shot Precise Manipulation via Visual Diffusion Descriptors Proceedings Article

Nikolaos Tsagkas, Jack Rome, Subramanian Ramamoorthy, Oisin Mac Aodha, Chris Xiaoxuan Lu

In: IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 11610–11617, IEEE, 2024.

@inproceedings{Tsagkas_2024,

title = {Click to Grasp: Zero-Shot Precise Manipulation via Visual Diffusion Descriptors},

author = {Nikolaos Tsagkas and Jack Rome and Subramanian Ramamoorthy and Oisin Mac Aodha and Chris Xiaoxuan Lu},

url = {http://dx.doi.org/10.1109/IROS58592.2024.10801488},

doi = {10.1109/iros58592.2024.10801488},

year = {2024},

date = {2024-12-25},

urldate = {2024-10-01},

booktitle = {IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

pages = {11610–11617},

publisher = {IEEE},

abstract = {Precise manipulation that is generalizable across scenes and objects remains a persistent challenge in robotics. Current approaches for this task heavily depend on having a significant number of training instances to handle objects with pronounced visual and/or geometric part ambiguities. Our work explores the grounding of fine-grained part descriptors for precise manipulation in a zero-shot setting by utilizing web-trained text-to-image diffusion-based generative models. We tackle the problem by framing it as a dense semantic part correspondence task. Our model returns a gripper pose for manipulating a specific part, using as reference a user-defined click from a source image of a visually different instance of the same object. We require no manual grasping demonstrations as we leverage the intrinsic object geometry and features. Practical experiments in a real-world tabletop scenario validate the efficacy of our approach, demonstrating its potential for advancing semantic-aware robotics manipulation.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Achieving Dexterous Bidirectional Interaction in Uncertain Conditions for Medical Robotics Journal Article

Carlo Tiseo, Quentin Rouxel, Martin Asenov, Keyhan Kouhkiloui Babarahmati, Subramanian Ramamoorthy, Zhibin Li, Michael Mistry

In: IEEE Transactions on Medical Robotics and Bionics (BioRob), vol. 7, no. 1, pp. 43–50, 2024, ISSN: 2576-3202.

@article{Tiseo_2025,

title = {Achieving Dexterous Bidirectional Interaction in Uncertain Conditions for Medical Robotics},

author = {Carlo Tiseo and Quentin Rouxel and Martin Asenov and Keyhan Kouhkiloui Babarahmati and Subramanian Ramamoorthy and Zhibin Li and Michael Mistry},

url = {http://dx.doi.org/10.1109/TMRB.2024.3506163

https://ieeexplore.ieee.org/document/10767388

https://arxiv.org/abs/2206.09906},

doi = {10.1109/tmrb.2024.3506163},

issn = {2576-3202},

year = {2024},

date = {2024-11-25},

urldate = {2025-02-01},

journal = {IEEE Transactions on Medical Robotics and Bionics (BioRob)},

volume = {7},

number = {1},

pages = {43–50},

publisher = {Institute of Electrical and Electronics Engineers (IEEE)},

abstract = {Medical robotics can help improve and extend the reach of healthcare services. A major challenge for medical robots is the complex physical interaction between the robot and the patients which is required to be safe. This work presents the preliminary evaluation of a recently introduced control architecture based on the Fractal Impedance Control (FIC) in medical applications. The deployed FIC architecture is robust to delay between the master and the replica robots. It can switch online between an admittance and impedance behaviour, and it is robust to interaction with unstructured environments. Our experiments analyse three scenarios: teleoperated surgery, rehabilitation, and remote ultrasound scan. The experiments did not require any adjustment of the robot tuning, which is essential in medical applications where the operators do not have an engineering background required to tune the controller. Our results show that is possible to teleoperate the robot to cut using a scalpel, do an ultrasound scan, and perform remote occupational therapy. However, our experiments also highlighted the need for a better robots embodiment to precisely control the system in 3D dynamic tasks.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Learning from Demonstration with Implicit Nonlinear Dynamics Models Working paper

Peter David Fagan, Subramanian Ramamoorthy

2024.

@workingpaper{fagan2025learningdemonstrationimplicitnonlinear,

title = {Learning from Demonstration with Implicit Nonlinear Dynamics Models},

author = {Peter David Fagan and Subramanian Ramamoorthy},

url = {https://arxiv.org/abs/2409.18768},

year = {2024},

date = {2024-09-27},

urldate = {2025-01-01},

abstract = {Learning from Demonstration (LfD) is a useful paradigm for training policies that solve tasks involving complex motions, such as those encountered in robotic manipulation. In practice, the successful application of LfD requires overcoming error accumulation during policy execution, i.e. the problem of drift due to errors compounding over time and the consequent out-of-distribution behaviours. Existing works seek to address this problem through scaling data collection, correcting policy errors with a human-in-the-loop, temporally ensembling policy predictions or through learning a dynamical system model with convergence guarantees. In this work, we propose and validate an alternative approach to overcoming this issue. Inspired by reservoir computing, we develop a recurrent neural network layer that includes a fixed nonlinear dynamical system with tunable dynamical properties for modelling temporal dynamics. We validate the efficacy of our neural network layer on the task of reproducing human handwriting motions using the LASA Human Handwriting Dataset. Through empirical experiments we demonstrate that incorporating our layer into existing neural network architectures addresses the issue of compounding errors in LfD. Furthermore, we perform a comparative evaluation against existing approaches including a temporal ensemble of policy predictions and an Echo State Network (ESN) implementation. We find that our approach yields greater policy precision and robustness on the handwriting task while also generalising to multiple dynamics regimes and maintaining competitive latency scores.},

keywords = {},

pubstate = {published},

tppubtype = {workingpaper}

}

Generating robotic elliptical excisions with human-like tool-tissue interactions Proceedings Article

Arturas Straizys, Michael Burke, Subramanian Ramamoorthy

In: IEEE International Conference on Robotics and Automation (ICRA), pp. 15017-15023, 2024.

@inproceedings{straizys2023generatingroboticellipticalexcisions,

title = {Generating robotic elliptical excisions with human-like tool-tissue interactions},

author = {Arturas Straizys and Michael Burke and Subramanian Ramamoorthy},

url = {https://ieeexplore.ieee.org/document/10610990

https://arxiv.org/abs/2309.12219

https://www.youtube.com/watch?v=dGrn-OBtOms},

doi = {10.1109/ICRA57147.2024.10610990},

year = {2024},

date = {2024-08-08},

urldate = {2024-08-08},

booktitle = {IEEE International Conference on Robotics and Automation (ICRA)},

pages = {15017-15023},

abstract = {In surgery, the application of appropriate force levels is critical for the success and safety of a given procedure. While many studies are focused on measuring in situ forces, little attention has been devoted to relating these observed forces to surgical techniques. Answering questions like "Can certain changes to a surgical technique result in lower forces and increased safety margins?" could lead to improved surgical practice, and importantly, patient outcomes. However, such studies would require a large number of trials and professional surgeons, which is generally impractical to arrange. Instead, we show how robots can learn several variations of a surgical technique from a smaller number of surgical demonstrations and interpolate learnt behaviour via a parameterised skill model. This enables a large number of trials to be performed by a robotic system and the analysis of surgical techniques and their downstream effects on tissue. Here, we introduce a parameterised model of the elliptical excision skill and apply a Bayesian optimisation scheme to optimise the excision behaviour with respect to expert ratings, as well as individual characteristics of excision forces. Results show that the proposed framework can successfully align the generated robot behaviour with subjects across varying levels of proficiency in terms of excision forces.},

howpublished = {In Proc. IEEE International Conference on Robotics and Automation (ICRA), 2024},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

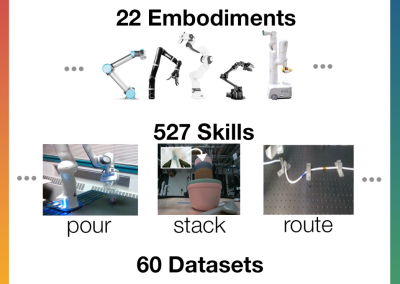

Open X-Embodiment: Robotic Learning Datasets and RT-X Models Best Paper Proceedings Article

Open-X Embodiment Collaboration

In: IEEE International Conference on Robotics and Automation (ICRA), pp. 6892-6903, 2024.

@inproceedings{embodimentcollaboration2025openxembodimentroboticlearning,

title = {Open X-Embodiment: Robotic Learning Datasets and RT-X Models},

author = {Open-X Embodiment Collaboration},

url = {https://arxiv.org/abs/2310.08864

https://robotics-transformer-x.github.io},

doi = {10.1109/ICRA57147.2024.10611477},

year = {2024},

date = {2024-08-08},

urldate = {2024-05-01},

booktitle = {IEEE International Conference on Robotics and Automation (ICRA)},

pages = {6892-6903},

abstract = {Large, high-capacity models trained on diverse datasets have shown remarkable successes on efficiently tackling downstream applications. In domains from NLP to Computer Vision, this has led to a consolidation of pretrained models, with general pretrained backbones serving as a starting point for many applications. Can such a consolidation happen in robotics? Conventionally, robotic learning methods train a separate model for every application, every robot, and even every environment. Can we instead train generalist X-robot policy that can be adapted efficiently to new robots, tasks, and environments? In this paper, we provide datasets in standardized data formats and models to make it possible to explore this possibility in the context of robotic manipulation, alongside experimental results that provide an example of effective X-robot policies. We assemble a dataset from 22 different robots collected through a collaboration between 21 institutions, demonstrating 527 skills (160266 tasks). We show that a high-capacity model trained on this data, which we call RT-X, exhibits positive transfer and improves the capabilities of multiple robots by leveraging experience from other platforms.},

howpublished = {In Proc. IEEE International Conference on Robotics and Automation (ICRA), 2024.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

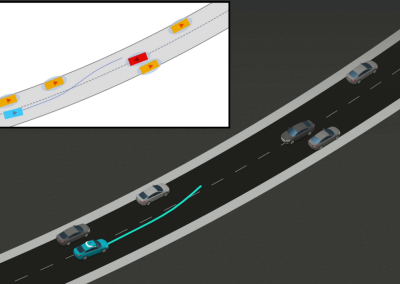

Adaptive Splitting of Reusable Temporal Monitors for Rare Traffic Violations Proceedings Article

Craig Innes, Subramanian Ramamoorthy

In: IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 12386-12393, 2024, ISBN: 2153-0866.

@inproceedings{innes2024adaptivesplittingreusabletemporal,

title = {Adaptive Splitting of Reusable Temporal Monitors for Rare Traffic Violations},

author = {Craig Innes and Subramanian Ramamoorthy},

url = {https://ieeexplore.ieee.org/document/10802747

https://arxiv.org/abs/2405.15771},

doi = {10.1109/IROS58592.2024.10802747},

isbn = {2153-0866},

year = {2024},

date = {2024-07-24},

urldate = {2024-01-01},

booktitle = {IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

pages = {12386-12393},

abstract = {Autonomous Vehicles (AVs) are often tested in simulation to estimate the probability they will violate safety specifications. Two common issues arise when using existing techniques to produce this estimation: If violations occur rarely, simple Monte-Carlo sampling techniques can fail to produce efficient estimates; if simulation horizons are too long, importance sampling techniques (which learn proposal distributions from past simulations) can fail to converge. This paper addresses both issues by interleaving rare-event sampling techniques with online specification monitoring algorithms. We use adaptive multi-level splitting to decompose simulations into partial trajectories, then calculate the distance of those partial trajectories to failure by leveraging robustness metrics from Signal Temporal Logic (STL). By caching those partial robustness metric values, we can efficiently re-use computations across multiple sampling stages. Our experiments on an interstate lane-change scenario show our method is viable for testing simulated AV-pipelines, efficiently estimating failure probabilities for STL specifications based on real traffic rules. We produce better estimates than Monte-Carlo and importance sampling in fewer simulations.},

howpublished = {In Proc. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

DROID: A Large-Scale In-The-Wild Robot Manipulation Dataset Proceedings Article

Alexander Khazatsky, Karl Pertsch, Suraj Nair, Ashwin Balakrishna, Sudeep Dasari, Siddharth Karamcheti, Soroush Nasiriany, Mohan Kumar Srirama, Lawrence Yunliang Chen, Kirsty Ellis, Peter David Fagan, Joey Hejna, Masha Itkina, Marion Lepert, Yecheng Jason Ma, Patrick Tree Miller, Jimmy Wu, Suneel Belkhale, Shivin Dass, Huy Ha, Arhan Jain, Abraham Lee, Youngwoon Lee, Marius Memmel, Sungjae Park, Ilija Radosavovic, Kaiyuan Wang, Albert Zhan, Kevin Black, Cheng Chi, Kyle Beltran Hatch, Shan Lin, Jingpei Lu, Jean Mercat, Abdul Rehman, Pannag R. Sanketi, Archit Sharma, Cody Simpson, Quan Vuong, Homer Rich Walke, Blake Wulfe, Ted Xiao, Jonathan Heewon Yang, Arefeh Yavary, Tony Z. Zhao, Christopher Agia, Rohan Baijal, Mateo Guaman Castro, Daphne Chen, Qiuyu Chen, Trinity Chung, Jaimyn Drake, Ethan Paul Foster, Jensen Gao, David Antonio Herrera, Minho Heo, Kyle Hsu, Jiaheng Hu, Donovon Jackson, Charlotte Le, Yunshuang Li, Roy Lin, Zehan Ma, Abhiram Maddukuri, Suvir Mirchandani, Daniel Morton, Tony Nguyen, Abigail O'Neill, Rosario Scalise, Derick Seale, Victor Son, Stephen Tian, Emi Tran, Andrew E. Wang, Yilin Wu, Annie Xie, Jingyun Yang, Patrick Yin, Yunchu Zhang, Osbert Bastani, Glen Berseth, Jeannette Bohg, Ken Goldberg, Abhinav Gupta, Abhishek Gupta, Dinesh Jayaraman, Joseph J. Lim, Jitendra Malik, Roberto Martín-Martín, Subramanian Ramamoorthy, Dorsa Sadigh, Shuran Song, Jiajun Wu, Michael C. Yip, Yuke Zhu, Thomas Kollar, Sergey Levine, Chelsea Finn

In: Proceedings of Robotics: Science and Systems, Delft, Netherlands, 2024, ISBN: 979-8-9902848-0-7.

@inproceedings{khazatsky2024droid,

title = {DROID: A Large-Scale In-The-Wild Robot Manipulation Dataset},

author = {Alexander Khazatsky and Karl Pertsch and Suraj Nair and Ashwin Balakrishna and Sudeep Dasari and Siddharth Karamcheti and Soroush Nasiriany and Mohan Kumar Srirama and Lawrence Yunliang Chen and Kirsty Ellis and Peter David Fagan and Joey Hejna and Masha Itkina and Marion Lepert and Yecheng Jason Ma and Patrick Tree Miller and Jimmy Wu and Suneel Belkhale and Shivin Dass and Huy Ha and Arhan Jain and Abraham Lee and Youngwoon Lee and Marius Memmel and Sungjae Park and Ilija Radosavovic and Kaiyuan Wang and Albert Zhan and Kevin Black and Cheng Chi and Kyle Beltran Hatch and Shan Lin and Jingpei Lu and Jean Mercat and Abdul Rehman and Pannag R. Sanketi and Archit Sharma and Cody Simpson and Quan Vuong and Homer Rich Walke and Blake Wulfe and Ted Xiao and Jonathan Heewon Yang and Arefeh Yavary and Tony Z. Zhao and Christopher Agia and Rohan Baijal and Mateo Guaman Castro and Daphne Chen and Qiuyu Chen and Trinity Chung and Jaimyn Drake and Ethan Paul Foster and Jensen Gao and David Antonio Herrera and Minho Heo and Kyle Hsu and Jiaheng Hu and Donovon Jackson and Charlotte Le and Yunshuang Li and Roy Lin and Zehan Ma and Abhiram Maddukuri and Suvir Mirchandani and Daniel Morton and Tony Nguyen and Abigail O'Neill and Rosario Scalise and Derick Seale and Victor Son and Stephen Tian and Emi Tran and Andrew E. Wang and Yilin Wu and Annie Xie and Jingyun Yang and Patrick Yin and Yunchu Zhang and Osbert Bastani and Glen Berseth and Jeannette Bohg and Ken Goldberg and Abhinav Gupta and Abhishek Gupta and Dinesh Jayaraman and Joseph J. Lim and Jitendra Malik and Roberto Martín-Martín and Subramanian Ramamoorthy and Dorsa Sadigh and Shuran Song and Jiajun Wu and Michael C. Yip and Yuke Zhu and Thomas Kollar and Sergey Levine and Chelsea Finn},

url = {https://www.roboticsproceedings.org/rss20/p120.html

https://droid-dataset.github.io},

doi = {10.15607/RSS.2024.XX.120},

isbn = {979-8-9902848-0-7},

year = {2024},

date = {2024-07-01},

urldate = {2024-07-01},

booktitle = {Proceedings of Robotics: Science and Systems},

address = {Delft, Netherlands},

abstract = {The creation of large, diverse, high-quality robot manipulation datasets is an important stepping stone on the path toward more capable and robust robotic manipulation policies. However, creating such datasets is challenging: collecting robot manipulation data in diverse environments poses logistical and safety challenges and requires substantial investments in hardware and human labour. As a result, even the most general robot manipulation policies today are mostly trained on data collected in a small number of environments with limited scene and task diversity. In this work, we introduce DROID (Distributed Robot Interaction Dataset), a diverse robot manipulation dataset with 76k demonstration trajectories or 350 hours of interaction data, collected across 564 scenes and 84 tasks by 50 data collectors in North America, Asia, and Europe over the course of 12 months. We demonstrate that training with DROID leads to policies with higher performance and improved generalization ability. We open source the full dataset, policy learning code, and a detailed guide for reproducing our robot hardware setup.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

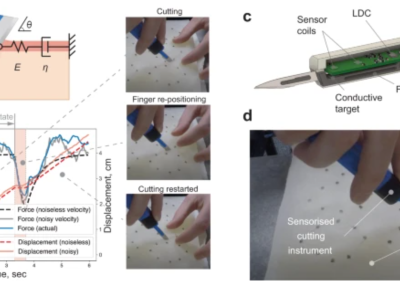

Learning human-like skills for cutting soft objects using force sensing PhD Thesis

Artūras Straižys

University of Edinburgh, 2024.

@phdthesis{straizys2024,

title = {Learning human-like skills for cutting soft objects using force sensing},

author = {Artūras Straižys},

url = {http://dx.doi.org/10.7488/era/4378},

year = {2024},

date = {2024-03-21},

urldate = {2024-03-21},

school = {University of Edinburgh},

abstract = {This thesis investigates the application of force sensing to learn robotic cutting of soft

objects.

The automation of deformable object cutting is a promising prospect for many important

areas, ranging from the food processing industry to soft tissue surgery. However,

the remarkable robustness with which humans perform these tasks is far beyond the

capabilities of current robotics. Humans achieve this robustness by employing various

cutting strategies that rely on tactile feedback. This thesis investigates these abilities,

ways of sensing and modeling these, and approaches to exploit these for robotic

cutting, through four key research contributions.

The first formulates and confirms the hypothesis that forces play a key role in the

robustness of cutting skills. This study investigated the human skills of scooping a

grapefruit with a knife. The insight behind the hypothesis is that humans guide the

knife’s movement using tactile cues that arise at the pulp/peel interface. Experiments

conducted in this thesis indicate that similar torque-based movement adaptation is an

effective strategy in robotic grapefruit scooping. The proposed method can be used

in many practical applications where cutting along the medium boundary is required;

for example, in surgical excision of solid tumours within soft tissue.

A second study considered the practical implementation of robotic cutting systems

that must account for a number of constraints. In many cutting tasks, the required

adaptation of cutting movement is subject to a non-holonomic constraint that restricts

the lateral motion of the blade. This makes it difficult to encode cutting motions using

dynamical system-based methods, such as dynamical movement primitives (DMPs),

otherwise well suited for learning complex reactive behaviours. The non-holonomic

DMPs proposed in this thesis introduce a coupling term derived by the Udwadia-

Kalaba method that guarantees run-time satisfaction of a wide range of constraints,

including non-holonomic. We demonstrate how this approach can be applied to learn

robotic cutting skills from demonstration.

A third study on the role of forces in surgical excisions has shown that the force

modality contains valuable information for skill understanding. It was found that incision

forces consist of subject-specific signatures that reflect excision assessment

by experts. We proposed a generative model of excision forces, which decomposes

cutting behaviour into amplitude and temporal components that encode meaningful

characteristics of the observed behaviour. Along with a novel sensorised instrument

developed for this study, this model can form the basis for surgical training systems

with objective skill assessment and opens up many opportunities for learning humanlike

robotic excision of soft tissues.

Finally, these approaches were combined for learning human-like robotic elliptical

excision skills, following an approach using the previously developed sensorised instrument

and the model of elliptical excision forces.We introduced a generative model

for pose trajectories of the blade in the elliptical excision task and used it to encode

the observed excision behaviours. We demonstrate how the proposed model of excision

forces can be employed to optimise the robotic behaviour with respect to the

performance assessment of experts and the desired human-like characteristics of

cutting forces.

This work let us analyse complex cutting tasks, techniques and skills from human

demonstrations. Such analysis can lead us to understand better what underlies these

skills in humans and how these can be replicated by a robot.},

howpublished = {Edinburgh Research Archive (ERA)},

keywords = {},

pubstate = {published},

tppubtype = {phdthesis}

}

objects.

The automation of deformable object cutting is a promising prospect for many important

areas, ranging from the food processing industry to soft tissue surgery. However,

the remarkable robustness with which humans perform these tasks is far beyond the

capabilities of current robotics. Humans achieve this robustness by employing various

cutting strategies that rely on tactile feedback. This thesis investigates these abilities,

ways of sensing and modeling these, and approaches to exploit these for robotic

cutting, through four key research contributions.

The first formulates and confirms the hypothesis that forces play a key role in the

robustness of cutting skills. This study investigated the human skills of scooping a

grapefruit with a knife. The insight behind the hypothesis is that humans guide the

knife’s movement using tactile cues that arise at the pulp/peel interface. Experiments

conducted in this thesis indicate that similar torque-based movement adaptation is an

effective strategy in robotic grapefruit scooping. The proposed method can be used

in many practical applications where cutting along the medium boundary is required;

for example, in surgical excision of solid tumours within soft tissue.

A second study considered the practical implementation of robotic cutting systems

that must account for a number of constraints. In many cutting tasks, the required

adaptation of cutting movement is subject to a non-holonomic constraint that restricts

the lateral motion of the blade. This makes it difficult to encode cutting motions using

dynamical system-based methods, such as dynamical movement primitives (DMPs),

otherwise well suited for learning complex reactive behaviours. The non-holonomic

DMPs proposed in this thesis introduce a coupling term derived by the Udwadia-

Kalaba method that guarantees run-time satisfaction of a wide range of constraints,

including non-holonomic. We demonstrate how this approach can be applied to learn

robotic cutting skills from demonstration.

A third study on the role of forces in surgical excisions has shown that the force

modality contains valuable information for skill understanding. It was found that incision

forces consist of subject-specific signatures that reflect excision assessment

by experts. We proposed a generative model of excision forces, which decomposes

cutting behaviour into amplitude and temporal components that encode meaningful

characteristics of the observed behaviour. Along with a novel sensorised instrument

developed for this study, this model can form the basis for surgical training systems

with objective skill assessment and opens up many opportunities for learning humanlike

robotic excision of soft tissues.

Finally, these approaches were combined for learning human-like robotic elliptical

excision skills, following an approach using the previously developed sensorised instrument

and the model of elliptical excision forces.We introduced a generative model

for pose trajectories of the blade in the elliptical excision task and used it to encode

the observed excision behaviours. We demonstrate how the proposed model of excision

forces can be employed to optimise the robotic behaviour with respect to the

performance assessment of experts and the desired human-like characteristics of

cutting forces.

This work let us analyse complex cutting tasks, techniques and skills from human

demonstrations. Such analysis can lead us to understand better what underlies these

skills in humans and how these can be replicated by a robot.

Unobtrusive Monitoring of Physical Weakness: A Simulated Approach Working paper

Chen Long-fei, Muhammad Ahmed Raza, Craig Innes, Subramanian Ramamoorthy, Robert B. Fisher

2024.

@workingpaper{longfei2024unobtrusivemonitoringphysicalweakness,

title = {Unobtrusive Monitoring of Physical Weakness: A Simulated Approach},

author = {Chen Long-fei and Muhammad Ahmed Raza and Craig Innes and Subramanian Ramamoorthy and Robert B. Fisher},

url = {https://arxiv.org/abs/2406.10045},

year = {2024},

date = {2024-01-01},

urldate = {2024-01-01},

abstract = {Aging and chronic conditions affect older adults' daily lives, making early detection of developing health issues crucial. Weakness, common in many conditions, alters physical movements and daily activities subtly. However, detecting such changes can be challenging due to their subtle and gradual nature. To address this, we employ a non-intrusive camera sensor to monitor individuals' daily sitting and relaxing activities for signs of weakness. We simulate weakness in healthy subjects by having them perform physical exercise and observing the behavioral changes in their daily activities before and after workouts. The proposed system captures fine-grained features related to body motion, inactivity, and environmental context in real-time while prioritizing privacy. A Bayesian Network is used to model the relationships between features, activities, and health conditions. We aim to identify specific features and activities that indicate such changes and determine the most suitable time scale for observing the change. Results show 0.97 accuracy in distinguishing simulated weakness at the daily level. Fine-grained behavioral features, including non-dominant upper body motion speed and scale, and inactivity distribution, along with a 300-second window, are found most effective. However, individual-specific models are recommended as no universal set of optimal features and activities was identified across all participants.},

keywords = {},

pubstate = {published},

tppubtype = {workingpaper}

}

2023

On Specifying for Trustworthiness Journal Article

Dhaminda B. Abeywickrama, Amel Bennaceur, Greg Chance, Yiannis Demiris, Anastasia Kordoni, Mark Levine, Luke Moffat, Luc Moreau, Mohammad Reza Mousavi, Bashar Nuseibeh, Subramanian Ramamoorthy, Jan Oliver Ringert, James Wilson, Shane Windsor, Kerstin Eder

In: Communications of the ACM, vol. 67, iss. 1, pp. 98–109, 2023, ISSN: 1557-7317.

@article{Abeywickrama_2023,

title = {On Specifying for Trustworthiness},

author = {Dhaminda B. Abeywickrama and Amel Bennaceur and Greg Chance and Yiannis Demiris and Anastasia Kordoni and Mark Levine and Luke Moffat and Luc Moreau and Mohammad Reza Mousavi and Bashar Nuseibeh and Subramanian Ramamoorthy and Jan Oliver Ringert and James Wilson and Shane Windsor and Kerstin Eder},

url = {https://dl.acm.org/doi/10.1145/3624699

https://arxiv.org/abs/2206.11421},

doi = {10.1145/3624699},

issn = {1557-7317},

year = {2023},

date = {2023-12-21},

urldate = {2023-12-21},

journal = {Communications of the ACM},

volume = {67},

issue = {1},

pages = {98–109},

publisher = {Association for Computing Machinery (ACM)},

abstract = {As autonomous systems (AS) increasingly become part of our daily lives, ensuring their trustworthiness is crucial. In order to demonstrate the trustworthiness of an AS, we first need to specify what is required for an AS to be considered trustworthy. This roadmap paper identifies key challenges for specifying for trustworthiness in AS, as identified during the "Specifying for Trustworthiness" workshop held as part of the UK Research and Innovation (UKRI) Trustworthy Autonomous Systems (TAS) programme. We look across a range of AS domains with consideration of the resilience, trust, functionality, verifiability, security, and governance and regulation of AS and identify some of the key specification challenges in these domains. We then highlight the intellectual challenges that are involved with specifying for trustworthiness in AS that cut across domains and are exacerbated by the inherent uncertainty involved with the environments in which AS need to operate.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Anticipating Accidents through Reasoned Simulation Proceedings Article

Craig Innes, Andrew Ireland, Yuhui Lin, Subramanian Ramamoorthy

In: Proceedings of the First International Symposium on Trustworthy Autonomous Systems (TAS), Association for Computing Machinery (ACM), 2023, ISSN: 9798400707346.

@inproceedings{Innes2023,

title = {Anticipating Accidents through Reasoned Simulation},

author = {Craig Innes and Andrew Ireland and Yuhui Lin and Subramanian Ramamoorthy},

url = {https://dl.acm.org/doi/10.1145/3597512.3599698},

doi = {10.1145/3597512.3599698},

issn = {9798400707346},

year = {2023},

date = {2023-07-11},

urldate = {2023-07-11},

booktitle = {Proceedings of the First International Symposium on Trustworthy Autonomous Systems (TAS)},

volume = {1},

number = {4},

publisher = {Association for Computing Machinery (ACM)},

abstract = {A key goal of the System-Theoretic Process Analysis (STPA) hazard analysis technique is the identification of loss scenarios – causal factors that could potentially lead to an accident. We propose an approach that aims to assist engineers in identifying potential loss scenarios that are associated with flawed assumptions about a system’s intended operational environment. Our approach combines aspects of STPA with formal modelling and simulation. Currently, we are at a proof-of-concept stage and illustrate the approach using a case study based upon a simple car door locking system. In terms of the formal modelling, we use Extended Logic Programming (ELP) and on the simulation side, we use the CARLA simulator for autonomous driving. We make use of the problem frames approach to requirements engineering to bridge between the informal aspects of STPA and our formal modelling.},

howpublished = {In Proc. International Symposium on Trustworthy Autonomous Systems (TAS)},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Learning rewards from exploratory demonstrations using probabilistic temporal ranking Journal Article

Michael Burke, Katie Lu, Daniel Angelov, Artūras Straižys, Craig Innes, Kartic Subr, Subramanian Ramamoorthy

In: Autonomous Robots, vol. 47, no. 6, pp. 733–751, 2023, ISSN: 1573-7527.

@article{Burke2023,

title = {Learning rewards from exploratory demonstrations using probabilistic temporal ranking},

author = {Michael Burke and Katie Lu and Daniel Angelov and Artūras Straižys and Craig Innes and Kartic Subr and Subramanian Ramamoorthy},

url = {https://doi.org/10.1007/s10514-023-10120-w

https://sites.google.com/view/ultrasound-scanner

https://www.youtube.com/watch?v=AzgIrblR0ME},

doi = {10.1007/s10514-023-10120-w},

issn = {1573-7527},

year = {2023},

date = {2023-07-10},

urldate = {2023-08-00},

journal = {Autonomous Robots},

volume = {47},

number = {6},

pages = {733--751},

publisher = {Springer Science and Business Media LLC},

abstract = {Informative path-planning is a well established approach to visual-servoing and active viewpoint selection in robotics, but typically assumes that a suitable cost function or goal state is known. This work considers the inverse problem, where the goal of the task is unknown, and a reward function needs to be inferred from exploratory example demonstrations provided by a demonstrator, for use in a downstream informative path-planning policy. Unfortunately, many existing reward inference strategies are unsuited to this class of problems, due to the exploratory nature of the demonstrations. In this paper, we propose an alternative approach to cope with the class of problems where these sub-optimal, exploratory demonstrations occur. We hypothesise that, in tasks which require discovery, successive states of any demonstration are progressively more likely to be associated with a higher reward, and use this hypothesis to generate time-based binary comparison outcomes and infer reward functions that support these ranks, under a probabilistic generative model. We formalise this probabilistic temporal ranking approach and show that it improves upon existing approaches to perform reward inference for autonomous ultrasound scanning, a novel application of learning from demonstration in medical imaging while also being of value across a broad range of goal-oriented learning from demonstration tasks.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Testing Rare Downstream Safety Violations via Upstream Adaptive Sampling of Perception Error Models Proceedings Article

Craig Innes, Subramanian Ramamoorthy

In: IEEE International Conference on Robotics and Automation (ICRA), 2023, ISBN: 979-8-3503-2365-8.

@inproceedings{innes2023testingraredownstreamsafety,

title = {Testing Rare Downstream Safety Violations via Upstream Adaptive Sampling of Perception Error Models},

author = {Craig Innes and Subramanian Ramamoorthy},

url = {https://ieeexplore.ieee.org/document/10161501

https://arxiv.org/abs/2209.09674},

doi = {10.1109/ICRA48891.2023.10161501},

isbn = {979-8-3503-2365-8},

year = {2023},

date = {2023-07-04},

urldate = {2023-01-01},

booktitle = {IEEE International Conference on Robotics and Automation (ICRA)},

abstract = {Testing black-box perceptual-control systems in simulation faces two difficulties. Firstly, perceptual inputs in simulation lack the fidelity of real-world sensor inputs. Secondly, for a reasonably accurate perception system, encountering a rare failure trajectory may require running infeasibly many simulations. This paper combines perception error models -- surrogates for a sensor-based detection system -- with state-dependent adaptive importance sampling. This allows us to efficiently assess the rare failure probabilities for real-world perceptual control systems within simulation. Our experiments with an autonomous braking system equipped with an RGB obstacle-detector show that our method can calculate accurate failure probabilities with an inexpensive number of simulations. Further, we show how choice of safety metric can influence the process of learning proposal distributions capable of reliably sampling high-probability failures.},

howpublished = {In Proc. IEEE International Conference on Robotics and Automation (ICRA), 2023},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Learning robotic cutting from demonstration: Non-holonomic DMPs using the Udwadia-Kalaba method Proceedings Article

Artūras Straižys, Michael Burke, Subramanian Ramamoorthy

In: IEEE International Conference on Robotics and Automation (ICRA), 2023, ISBN: 979-8-3503-2365-8.

@inproceedings{straižys2022learningroboticcuttingdemonstration,

title = {Learning robotic cutting from demonstration: Non-holonomic DMPs using the Udwadia-Kalaba method},

author = {Artūras Straižys and Michael Burke and Subramanian Ramamoorthy},

url = {https://ieeexplore.ieee.org/document/10160917

https://arxiv.org/abs/2209.12039

https://github.com/straizys/nonholonomic-dmp},

doi = {10.1109/ICRA48891.2023.10160917},

isbn = {979-8-3503-2365-8},

year = {2023},

date = {2023-07-04},

urldate = {2022-01-01},

booktitle = { IEEE International Conference on Robotics and Automation (ICRA)},

abstract = {Dynamic Movement Primitives (DMPs) offer great versatility for encoding, generating and adapting complex end-effector trajectories. DMPs are also very well suited to learning manipulation skills from human demonstration. However, the reactive nature of DMPs restricts their applicability for tool use and object manipulation tasks involving non-holonomic constraints, such as scalpel cutting or catheter steering. In this work, we extend the Cartesian space DMP formulation by adding a coupling term that enforces a pre-defined set of non-holonomic constraints. We obtain the closed-form expression for the constraint forcing term using the Udwadia-Kalaba method. This approach offers a clean and practical solution for guaranteed constraint satisfaction at run-time. Further, the proposed analytical form of the constraint forcing term enables efficient trajectory optimization subject to constraints. We demonstrate the usefulness of this approach by showing how we can learn robotic cutting skills from human demonstration.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

A generative force model for surgical skill quantification using sensorised instruments Journal Article

Artūras Straižys, Michael Burke, Paul M. Brennan, Subramanian Ramamoorthy

In: Communications Engineering, vol. 2, no. 36, 2023, ISSN: 2731-3395.

@article{Straižys2023,

title = {A generative force model for surgical skill quantification using sensorised instruments},

author = {Artūras Straižys and Michael Burke and Paul M. Brennan and Subramanian Ramamoorthy},

url = {https://www.nature.com/articles/s44172-023-00086-z},

doi = {10.1038/s44172-023-00086-z},

issn = {2731-3395},

year = {2023},

date = {2023-06-10},

urldate = {2023-12-00},

journal = {Communications Engineering},

volume = {2},

number = {36},

publisher = {Springer Science and Business Media LLC},

abstract = {Surgical skill requires the manipulation of soft viscoelastic media. Its measurement through generative models is essential both for accurate quantification of surgical ability and for eventual automation in robotic platforms. Here we describe a sensorised scalpel, along with a generative model to assess surgical skill in elliptical excision, a representative manipulation task. Our approach allows us to capture temporal features via data collection and downstream analysis. We demonstrate that incision forces carry information that is relevant for skill interpretation, but inaccessible via conventional descriptive statistics. We tested our approach on 12 medical students and two practicing surgeons using a tissue phantom mimicking the properties of human skin. We demonstrate that our approach can bring deeper insight into performance analysis than traditional time and motion studies, and help to explain subjective assessor skill ratings. Our technique could be useful in applications spanning forensics, pathology as well as surgical skill quantification.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

DiPA: Probabilistic Multi-Modal Interactive Prediction for Autonomous Driving Journal Article

Anthony Knittel, Majd Hawasly, Stefano V. Albrecht, John Redford, Subramanian Ramamoorthy

In: IEEE Robotics and Automation Letters (RA-L), vol. 8, no. 8, pp. 4887–4894, 2023, ISSN: 2377-3774, (Workd done at FiveAI).

@article{Knittel_2023,

title = {DiPA: Probabilistic Multi-Modal Interactive Prediction for Autonomous Driving},

author = {Anthony Knittel and Majd Hawasly and Stefano V. Albrecht and John Redford and Subramanian Ramamoorthy},

url = {http://dx.doi.org/10.1109/LRA.2023.3284355},

doi = {10.1109/lra.2023.3284355},

issn = {2377-3774},

year = {2023},

date = {2023-06-08},

urldate = {2023-06-08},

journal = {IEEE Robotics and Automation Letters (RA-L)},

volume = {8},

number = {8},

pages = {4887–4894},

publisher = {Institute of Electrical and Electronics Engineers (IEEE)},

abstract = {Accurate prediction is important for operating an autonomous vehicle in interactive scenarios. Prediction must be fast, to support multiple requests from a planner exploring a range of possible futures. The generated predictions must accurately represent the probabilities of predicted trajectories, while also capturing different modes of behaviour (such as turning left vs continuing straight at a junction). To this end, we present DiPA, an interactive predictor that addresses these challenging requirements. Previous interactive prediction methods use an encoding of k-mode-samples, which under-represents the full distribution. Other methods optimise closest-mode evaluations, which test whether one of the predictions is similar to the ground-truth, but allow additional unlikely predictions to occur, over-representing unlikely predictions. DiPA addresses these limitations by using a Gaussian-Mixture-Model to encode the full distribution, and optimising predictions using both probabilistic and closest-mode measures. These objectives respectively optimise probabilistic accuracy and the ability to capture distinct behaviours, and there is a challenging trade-off between them. We are able to solve both together using a novel training regime. DiPA achieves new state-of-the-art performance on the INTERACTION and NGSIM datasets, and improves over the baseline (MFP) when both closest-mode and probabilistic evaluations are used. This demonstrates effective prediction for supporting a planner on interactive scenarios.},

note = {Workd done at FiveAI},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Interactive Acquisition of Fine-grained Visual Concepts by Exploiting Semantics of Generic Characterizations in Discourse Best Paper Proceedings Article

Jonghyuk Park, Alex Lascarides, Subramanian Ramamoorthy

In: Proceedings of International Conference on Computational Semantics (IWCS), pp. 318-331, Association for Computational Linguistics, 2023.

@inproceedings{park2023interactiveacquisitionfinegrainedvisual,

title = {Interactive Acquisition of Fine-grained Visual Concepts by Exploiting Semantics of Generic Characterizations in Discourse},

author = {Jonghyuk Park and Alex Lascarides and Subramanian Ramamoorthy},

url = {https://aclanthology.org/2023.iwcs-1.33/

https://arxiv.org/abs/2305.03461},

year = {2023},

date = {2023-06-01},

urldate = {2023-06-01},

booktitle = {Proceedings of International Conference on Computational Semantics (IWCS)},

volume = {1},

pages = {318-331},

publisher = {Association for Computational Linguistics},